Note: These are the troubleshooting steps I took to resolve the Nutanix alert "Host removed from metadata ring". If you are unsure about or not familiar with running these commands yourself, please engage Nutanix Support to fix this alert.

A node is taken out of the Cassandra ring and put into forwarding mode when either of the first two conditions below is met, provided the third condition also holds:

- The node is completely down for 30 minutes.

- Cassandra restarts (flaps) 7 times within 15 minutes.

- At least 3 nodes remain in the ring after the removal (so in a 5-node cluster, up to two nodes can be in this state).
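To make the rules above concrete, here is a rough POSIX shell sketch of how they combine, based only on my reading of the list: either trigger (30 minutes down, or 7 flaps in 15 minutes) is enough, but only if at least 3 nodes stay in the ring. The function name `should_remove_from_ring` and its arguments are hypothetical; this is not the actual Cassandra monitoring code in AOS.

```bash
# Hypothetical helper encoding the removal rules listed above.
# Arguments:
#   $1 = minutes the node has been completely down
#   $2 = Cassandra restarts (flaps) seen in the last 15 minutes
#   $3 = nodes that would remain in the ring if this node were removed
# Returns 0 if the node would be taken out of the ring, 1 otherwise.
should_remove_from_ring() {
  down_minutes=$1
  flaps_15min=$2
  remaining_nodes=$3

  # Safety condition: never shrink the ring below 3 nodes.
  if [ "$remaining_nodes" -lt 3 ]; then
    return 1
  fi

  # Trigger conditions: down for 30+ minutes, or 7+ flaps in 15 minutes.
  if [ "$down_minutes" -ge 30 ] || [ "$flaps_15min" -ge 7 ]; then
    return 0
  fi
  return 1
}
```

For example, `should_remove_from_ring 45 0 4` succeeds, while `should_remove_from_ring 45 0 2` fails because the ring would drop below 3 nodes.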

Auto-healing was introduced in AOS version 3.5.x to prevent taking the cluster down due to multiple node failures at different intervals.

Once these conditions are met, the node is put into the "marked to be detached" state and an alert is raised.

If the CVM is in maintenance mode or otherwise unavailable, the node/CVM is detached from the ring after the following delay, depending on the AOS version:

- 120 minutes - AOS 5.10.10, AOS 5.11.2, AOS 5.14 or later

- 60 minutes - AOS 5.10.8.x, AOS 5.10.9.x, AOS 5.11, AOS 5.11.1
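The detach delays above can be looked up with a small `case` statement. This is a sketch under my own reading that "or later" applies within each release line (5.10.10 onwards, 5.11.2 onwards, and 5.14 onwards including 6.x); versions the list does not cover, such as 5.12 or anything before 5.10.8, are reported as `unknown`. The function name `detach_delay_minutes` is hypothetical.

```bash
# Hypothetical helper: prints the detach delay in minutes for an AOS
# version string, based only on the list above.
detach_delay_minutes() {
  case "$1" in
    # 60 minutes: 5.10.8.x, 5.10.9.x, 5.11, 5.11.1
    5.10.8|5.10.8.*|5.10.9|5.10.9.*|5.11|5.11.1)
      echo 60 ;;
    # 120 minutes: 5.10.10+, 5.11.2+, 5.14+ (including 5.2x and 6.x and later)
    5.10.1[0-9]|5.10.1[0-9].*|5.11.[2-9]|5.11.[2-9].*|5.1[4-9]|5.1[4-9].*|5.[2-9][0-9]*|[6-9].*)
      echo 120 ;;
    # Anything else is not covered by the list.
    *)
      echo unknown ;;
  esac
}
```

For example, `detach_delay_minutes 5.10.9.1` prints `60` and `detach_delay_minutes 5.15.4` prints `120`.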

If you can bring the node or Cassandra back to the UP state before the detachment completes, the process is aborted.

If the node was removed from the metadata ring because of a known network issue, or because of a scheduled activity performed without putting the CVM into maintenance mode, the node can be added back to the metadata ring.

If none of these scenarios explains why the node was removed from the metadata ring, first identify and fix the underlying issue; only then can the node be added back to the metadata ring.

When a node is detached from the metadata ring, the Hardware view and the node summary show it as follows.

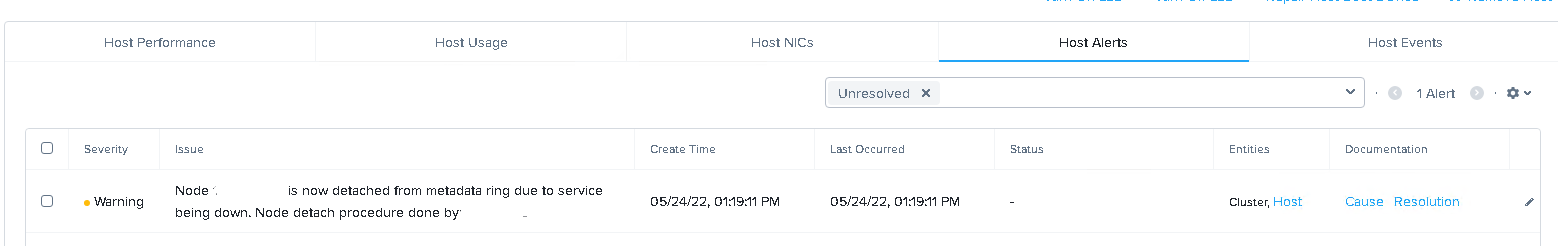

A similar alert also appears in the Nutanix alerts console.

So, let's start troubleshooting. First, check the cluster status by running the following command on one of the CVMs:

nutanix@cvm:~$ cluster status

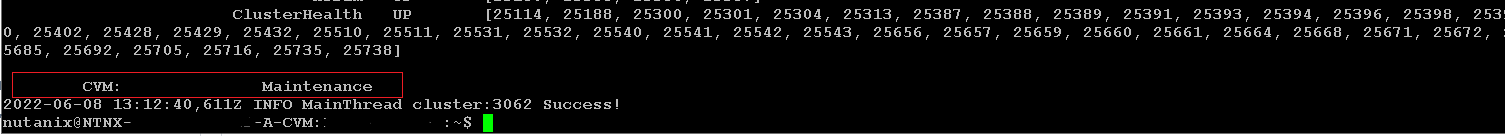

As you can see, one of the CVMs is in maintenance mode. Use the following command to list all of the hosts and their status:

nutanix@cvm:~$ ncli host list

In the output, we can clearly see that the CVM of the node removed from the metadata ring is in maintenance mode, and the same output confirms that the node has been removed from the metadata ring.

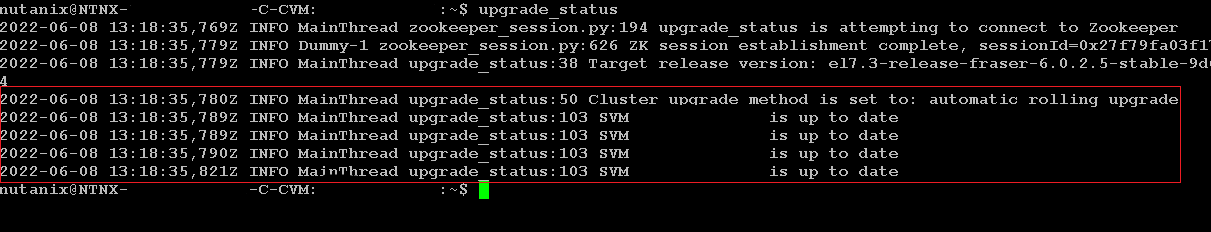
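If there are many hosts, the affected host's ID can be picked out of the `ncli host list` output with a small awk filter. This sketch assumes the output is printed as blocks of `Key : Value` lines separated by blank lines and that the host ID is in a field named `Id`; the field name `Under Maintenance Mode` used in the example is also an assumption, so compare against the output of your own AOS version before relying on it. `find_hosts_where` is a hypothetical helper name.

```bash
# Hypothetical helper: reads "Key : Value" records (separated by blank
# lines) on stdin and prints the Id of every record whose field $1
# equals $2. Splits each line at its first colon only, so values that
# contain "::" (such as host IDs) are kept intact.
find_hosts_where() {
  awk -v key="$1" -v want="$2" '
    function trim(s) { sub(/^[ \t]+/, "", s); sub(/[ \t]+$/, "", s); return s }
    # A blank line ends the current record.
    /^[ \t]*$/ { if (matched && id != "") print id; id = ""; matched = 0; next }
    {
      pos = index($0, ":")
      if (pos == 0) next
      k = trim(substr($0, 1, pos - 1))
      v = trim(substr($0, pos + 1))
      if (k == "Id") id = v
      if (k == key && v == want) matched = 1
    }
    # Flush the last record if the input does not end with a blank line.
    END { if (matched && id != "") print id }
  '
}
```

On the CVM this would be used as `ncli host list | find_hosts_where 'Under Maintenance Mode' true`.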

Before taking the CVM out of maintenance mode, I checked the cluster upgrade status to make sure no maintenance activities were running on the cluster:

nutanix@cvm:~$ upgrade_status

nutanix@cvm:~$ host_upgrade_status

nutanix@cvm:~$ ncc health_checks system_checks cluster_active_upgrade_check

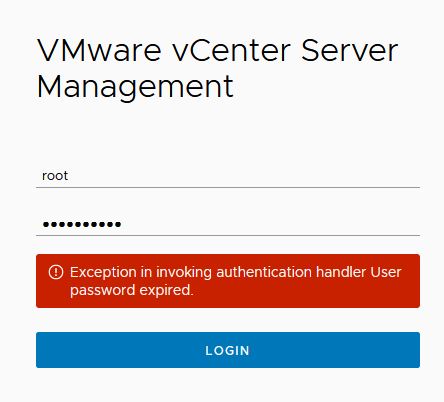
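The three checks can be wrapped in one function so they always run in the same order. This is only a convenience sketch: `run_upgrade_prechecks` is a hypothetical name, I have not verified what exit codes these tools return, and a clean exit does not prove that no upgrade is running, so the output of each command still needs to be read.

```bash
# Hypothetical wrapper: runs the three pre-checks above in order and
# stops at the first command that exits non-zero.
run_upgrade_prechecks() {
  echo "== upgrade_status =="
  upgrade_status || return 1
  echo "== host_upgrade_status =="
  host_upgrade_status || return 1
  echo "== ncc cluster_active_upgrade_check =="
  ncc health_checks system_checks cluster_active_upgrade_check || return 1
  # Exit status alone is not proof the cluster is idle; read the output.
  echo "All pre-check commands completed; review the output above."
}
```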

Now that I'm sure there are no pending upgrade tasks, the affected CVM can be taken out of maintenance mode with the following command (the host ID can be found in the ncli host list output):

nutanix@cvm$ ncli host edit id=<<host ID>> enable-maintenance-mode=false

Once the CVM is out of maintenance mode, the option to add the node back to the metadata ring is enabled in the Prism Element UI.

Alternatively, the following command enables the metadata store on this node:

nutanix@cvm$ ncli host enable-metadata-store id=<<host ID>>
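Since the same host ID goes into both commands, a small helper that prints them for a given ID can help avoid typos. This sketch (`readd_to_metadata_ring` is a hypothetical name) only prints the two ncli commands used above and does not run them, so each one can be pasted separately and checked in Recent Tasks before moving on.

```bash
# Hypothetical helper: prints the two ncli commands used above for one
# host ID, in the order they should be run. Nothing is executed.
readd_to_metadata_ring() {
  if [ -z "$1" ]; then
    echo "usage: readd_to_metadata_ring <host-id>" >&2
    return 2
  fi
  # Step 1: take the CVM out of maintenance mode.
  echo "ncli host edit id=$1 enable-maintenance-mode=false"
  # Step 2: enable the metadata store so the node rejoins the ring.
  echo "ncli host enable-metadata-store id=$1"
}
```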

Progress can be monitored from Recent Tasks.

Again, if you are not familiar with the steps above, or they do not match your scenario, please engage Nutanix Support to resolve the issue.

1 Comment

This can also happen when updating firmware via LCM. In that case the hypervisor host restarts into Phoenix recovery, and you must first reboot it back into normal mode:

phoenix / # python /phoenix/reboot_to_host.py

See: https://portal.nutanix.com/page/documents/kbs/details?targetId=kA00e000000CyF8CAK

After that, you can use the commands described here. Thank you!